by Jonathan A. Handler, MD, FACEP, FAMIA

Recently, I announced the free, open-source, public availability of Darth Vecdor (DV), a tool I wrote for creating graph databases (“knowledge bases” or “knowledge graphs”) using LLMs. For more info about DV, see either or both of:

Or… visit the GitHub site to see more information and download the code.

So… let’s say someone uses DV to make a knowledge base (e.g., a database containing information about thousands of lab tests) and wants to use or share it. It’s stored in a PostgreSQL database, so theoretically, the data can just be exported from the database. However, in practice, some issues may make this infeasible.

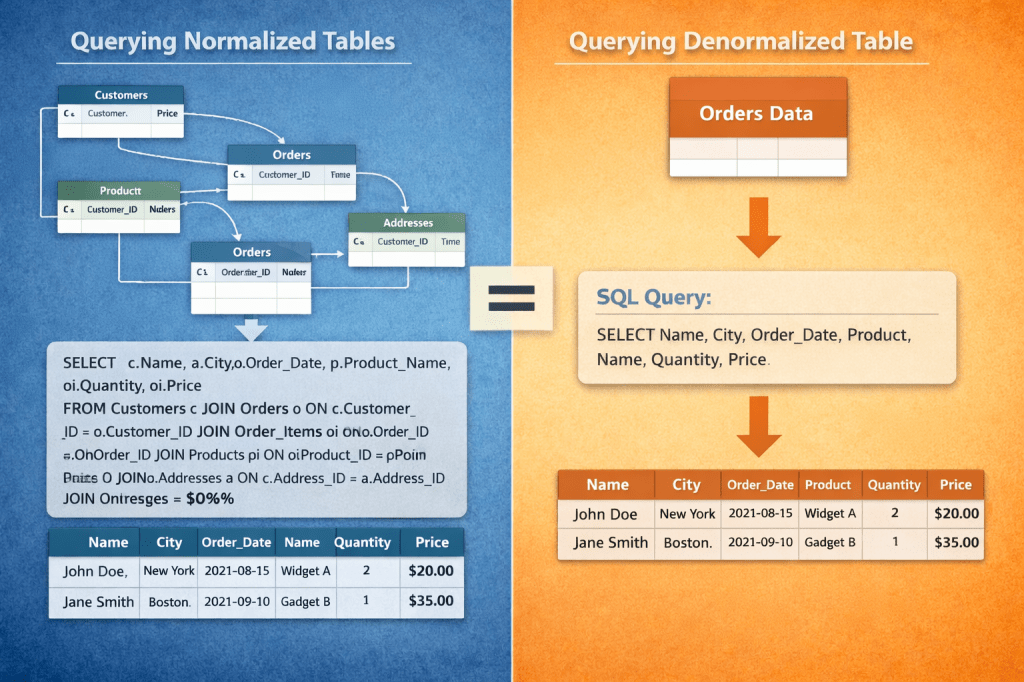

Figure 1: Database Table Design Affects the Complexity of Querying

Image by ChatGPT based on prompts by author (Jonathan A. Handler)

For example:

- Normalization: Since DV stores its data in a (mostly) “normalized” structure, queries must join the right tables in the right way to extract something meaningful and easy to use (see left side of Figure 1 above). DV “comes out of the box with” queries to create SQL views that can do some of that work. However, these will probably not meet every need, or even most needs. Plus, all that joining can lead to complex and slow queries.

- Data Size: DV may generate massive tables, and queries on those tables may generate even larger results. Sharing giant volumes of content may be difficult, impractical, or undesirable in some cases.

- Institutional Policy and/or Licensing Limitations: An instance of DV may use or contain content associated with policies or licensing that limits sharing of that content.

- Transparency: Receiving a dataset without supporting information about how it was generated may not meet everyone’s needs. Some may want transparency into how the dataset was generated.

- Re-Generation: As knowledge, terminologies, and LLMs evolve, it may be desirable to re-generate the dataset using new terminology versions, better LLMs, and/or modified DV configurations.
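To make the normalization point concrete, here is a minimal sketch of why normalized data needs several joins before it becomes readable. The table and column names (`code`, `relationship_type`, `relationship`) are hypothetical and are not DV's actual schema, and the sketch uses Python's built-in SQLite rather than PostgreSQL only so that it runs without any setup.

```python
import sqlite3

# Hypothetical, simplified schema for illustration only; NOT DV's real tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE code (id INTEGER PRIMARY KEY, label TEXT);
    CREATE TABLE relationship_type (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE relationship (
        subject_id INTEGER REFERENCES code(id),
        type_id    INTEGER REFERENCES relationship_type(id),
        object_id  INTEGER REFERENCES code(id)
    );
    INSERT INTO code VALUES
        (1, 'Serum potassium'), (2, 'Hyperkalemia'),
        (3, 'Troponin'), (4, 'Chest pain');
    INSERT INTO relationship_type VALUES (1, 'has_indication');
    INSERT INTO relationship VALUES (1, 1, 2), (3, 1, 4);
""")

# The relationship table holds only IDs, so three joins are needed
# just to turn each row into something a person can read.
rows = conn.execute("""
    SELECT s.label, t.name, o.label
    FROM relationship AS r
    JOIN code AS s ON s.id = r.subject_id
    JOIN relationship_type AS t ON t.id = r.type_id
    JOIN code AS o ON o.id = r.object_id
    ORDER BY s.label
""").fetchall()

for row in rows:
    print(row)
```

Even in this toy case, the query is longer than the data it returns. With more tables and millions of rows, the same pattern can become both harder to write and slower to run.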

To help address these issues, I’ve given DV some new features (in version v0.0.11-alpha).

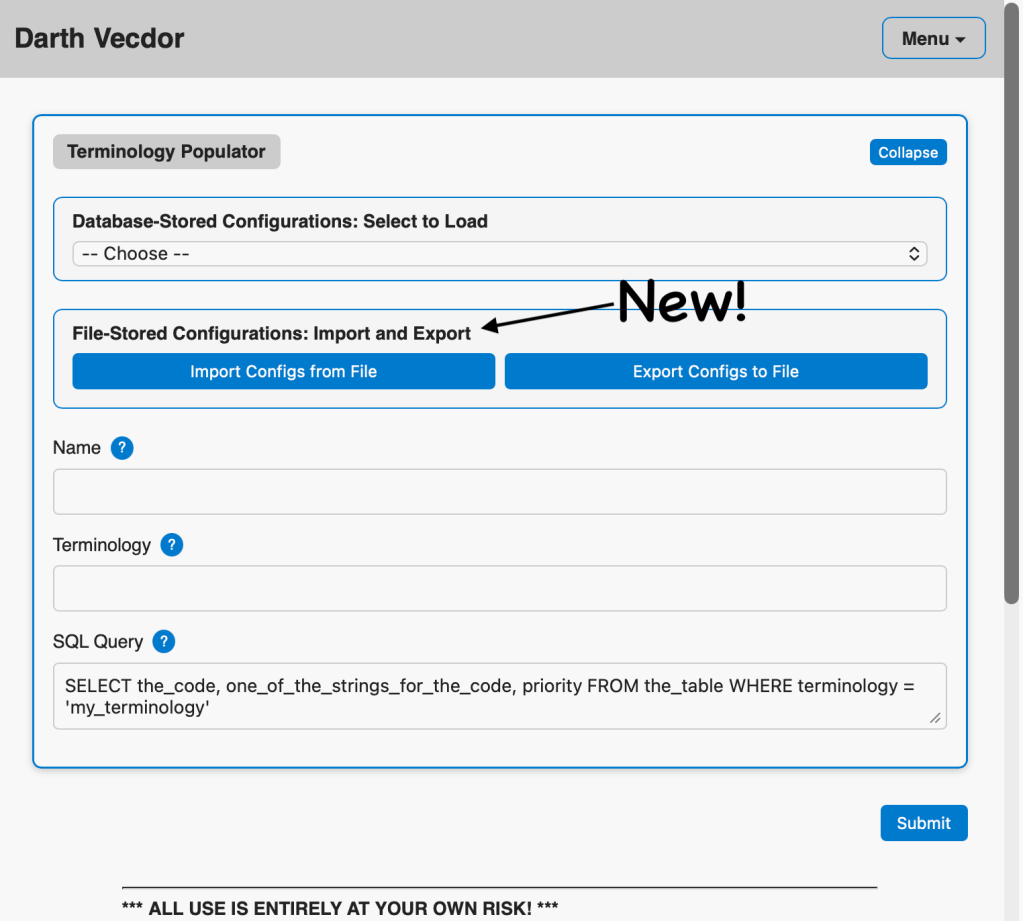

Export and Import of DV Configurations

Each “configuration form” currently on the DV user interface (e.g., for loading a terminology, creating a code set, or generating a set of relationships) now has buttons for exporting and importing the form’s contents (see Figure 2 below). With this, instead of (or in addition to) sharing the DV database content, one can export the configuration that created that content as a file and share it with others. Recipients should be able to import and run the file, as long as DV and its associated PostgreSQL database are otherwise set up as needed (e.g., their DV is able to work with the new configuration’s required LLMs, access required external data sources, etc.). If desired, recipients can also use the DV user interface to edit the imported configuration prior to running it.

Figure 2: New Import and Export Buttons
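For readers who think in code, the export, share, import, and edit cycle can be sketched roughly as follows. Everything here is an assumption made for illustration: the JSON format, the field names, and the `export_config` and `import_config` helpers are hypothetical, and DV's real export files and internals may look quite different.

```python
import json
import tempfile
from pathlib import Path


def export_config(form_values: dict, path: Path) -> None:
    """Write a form's contents to a file (hypothetical helper, not DV's API)."""
    path.write_text(json.dumps(form_values, indent=2), encoding="utf-8")


def import_config(path: Path) -> dict:
    """Read a previously exported form back in (hypothetical helper)."""
    return json.loads(path.read_text(encoding="utf-8"))


# Hypothetical field names; a real DV configuration form has its own fields.
original = {
    "form": "relationship_generation",
    "terminology": "lab_tests",
    "relationship": "has_indication",
    "llm": "example-model",
}

with tempfile.TemporaryDirectory() as tmp:
    config_file = Path(tmp) / "lab_indications.json"
    export_config(original, config_file)   # sender exports and shares the file
    imported = import_config(config_file)  # recipient imports it

# The recipient can adjust the imported configuration before running it.
imported["terminology"] = "schs_lab_tests"
print(imported)
```

The point of the sketch is that the shared file carries the recipe rather than the generated data, so the recipient regenerates the content in their own environment.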

This new export and import functionality should help address all of the above noted issues. I can imagine (hope for!) a future in which people create and share DV configurations, even developing DV configuration libraries to share amongst themselves or with the world. A group of related configurations might be used to build the knowledge base required to power an app. Websites aggregating lots of these “configuration groups” would serve as “app libraries” or “app stores” that might enable widespread scaling of knowledge base-driven apps.

Hypothetical, FICTIONAL, Aspirational Export/Import Example

Sally is at Jeneral University Hospital (JUH). She used DV to help create a knowledge graph of clinical indications for thousands of their most commonly used lab tests. She had an idea to use the knowledge base together with other JUH analytics, such as predicted volumes of patients having various clinical conditions, to better anticipate lab supply and resourcing needs. On testing of the idea, she found it substantially improved lab turnaround times while also lowering costs!

Sally’s colleague, Joe, works at the Spesifik College Healthcare System (SCHS). Joe heard about Sally’s success and wants to try something similar at SCHS. He asks Sally for a copy of her knowledge base. Unfortunately, Sally says that sharing JUH’s DV-generated knowledge base with SCHS is functionally impossible because JUH’s policy for that sharing is unusually restrictive. It requires unanimous consent from a committee composed of expensive legal consultants and two dozen top JUH leaders. However, Sally notes that the policy has no such restrictions with regard to DV configurations. In fact, JUH policy encourages such sharing! So, Sally exports the relevant configurations and sends them to Joe. Joe imports them, makes a few changes to suit SCHS-specific needs, and uses them to generate an SCHS version of the knowledge base. Joe’s testing of the integrated solution finds sizeable benefits!

Sally and Joe collaborate on an academic article, reporting their methodology and objectively measurable successes. Their paper includes a link to their exported configurations. The article is widely read, and health systems around the world use the configurations as starting points for their own implementations. This becomes a worldwide best practice among labs, improving service and cutting healthcare costs across the globe.

Building on this success, Sally and Joe put together a collaborative of peer institutions. The collaborative develops hundreds of DV configurations that, collectively, generate vast knowledge bases. Each configuration has one or more associated use cases that have been proven to create benefit for the institutions, their staff, and the patients they serve. The collaborative hosts a use case library website. Each use case in the library includes information about its purpose, instructions for its implementation, the associated DV configurations, and links to other required resources. The library’s use cases lead to such impactful benefits that Sally and Joe win the 2037 Nobel Prize for Medicine. 😀

Aspirational? Obviously! But a person can hope… 😀

Batch Custom Table Generation

Instead of querying the “normalized” DV tables directly, you may wish to save the results of a query as a new, flattened, “denormalized” table (see left vs. right side of Figure 1 above). This is often called “materializing” the query. If the original query was slow, queries against the new table will often be much faster. Also, the data in the resulting table can generally be easily exported, such as into a comma-separated values (CSV) file. Depending on the content, most other databases and data tools (e.g., SQL Server, Access, Oracle, Snowflake, and MySQL) can easily import CSV files. Even Microsoft Excel can usually load and work with a CSV file directly (as long as it doesn’t exceed Excel’s size limitations). This may facilitate the sharing and real-world use of DV-related data.
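As a rough illustration of materializing and then exporting, the sketch below saves a join's result as a flat table and writes that table out as CSV text. The table names (`lab_test`, `indication`, `test_indication_flat`) are hypothetical and not part of DV, and SQLite stands in for PostgreSQL so the example runs anywhere Python does.

```python
import csv
import io
import sqlite3

# Hypothetical tables for illustration only; NOT DV's real schema.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE lab_test (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE indication (
        test_id INTEGER REFERENCES lab_test(id),
        condition_name TEXT
    );
    INSERT INTO lab_test VALUES (1, 'Serum potassium'), (2, 'Troponin');
    INSERT INTO indication VALUES
        (1, 'Hyperkalemia'), (1, 'Renal failure'), (2, 'Chest pain');

    -- Materialize: store the join's result as an ordinary flat table.
    CREATE TABLE test_indication_flat AS
        SELECT t.name AS test_name, i.condition_name AS condition_name
        FROM lab_test AS t
        JOIN indication AS i ON i.test_id = t.id;
""")

# Later queries read the flat table directly, with no joins required.
cursor = conn.execute("""
    SELECT test_name, condition_name
    FROM test_indication_flat
    ORDER BY test_name, condition_name
""")

# Write a header row plus the data rows as CSV (to a string here;
# a real export would write to a file instead).
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow([column[0] for column in cursor.description])
writer.writerows(cursor)
csv_text = buffer.getvalue()
print(csv_text)
```

One trade-off to keep in mind: a materialized table is a snapshot, so it needs to be rebuilt if the underlying data changes.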

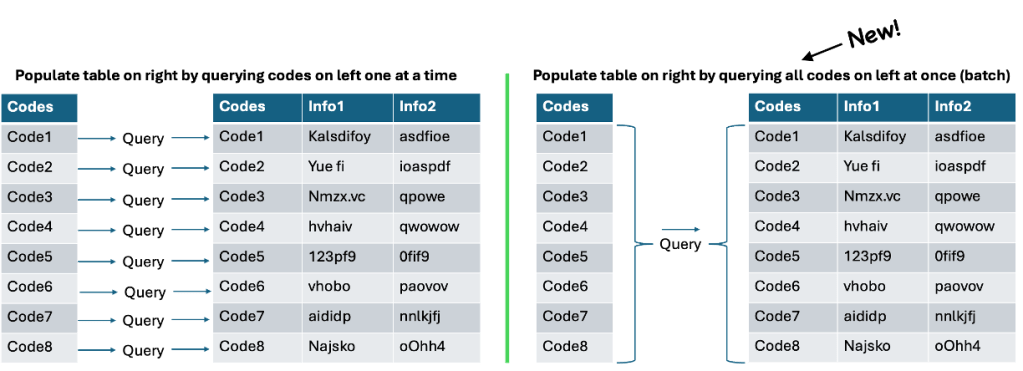

On its initial release, DV already had a mechanism to create custom tables (“materialized” query results) by repeatedly (but automatically) running a query, once for each code in a DV “terminology” or “code set” (see left side of Figure 3 below). However, in some cases it may be much faster and more efficient to run the query as a “batch” (populating the entire table in a single query rather than one query per terminology code — see right side of Figure 3 below). The just-released version of DV now offers both approaches (one-query-per-code and “batch” mode) to create new custom tables.

Figure 3: Populating a materialized table one code at a time vs. all at once in a batch
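The difference between the two approaches can be sketched as below. This is only an illustration under stated assumptions: the tables (`code`, `indication`, `flat_per_code`, `flat_batch`) are hypothetical, SQLite stands in for PostgreSQL, and DV's actual mechanism for running these queries is not shown here.

```python
import sqlite3

# Hypothetical tables for illustration only; NOT DV's real schema.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE code (id INTEGER PRIMARY KEY, label TEXT);
    CREATE TABLE indication (
        code_id INTEGER REFERENCES code(id),
        condition_name TEXT
    );
    INSERT INTO code VALUES (1, 'Serum potassium'), (2, 'Troponin');
    INSERT INTO indication VALUES
        (1, 'Hyperkalemia'), (2, 'Chest pain'), (2, 'Myocardial infarction');
    CREATE TABLE flat_per_code (label TEXT, condition_name TEXT);
    CREATE TABLE flat_batch (label TEXT, condition_name TEXT);
""")

# Approach 1: one query per code. The same query runs once for each code,
# so the number of round trips grows with the size of the terminology.
code_ids = [row[0] for row in conn.execute("SELECT id FROM code")]
for code_id in code_ids:
    conn.execute("""
        INSERT INTO flat_per_code
        SELECT c.label, i.condition_name
        FROM code AS c
        JOIN indication AS i ON i.code_id = c.id
        WHERE c.id = ?
    """, (code_id,))

# Approach 2: batch. A single query populates the whole table at once.
conn.execute("""
    INSERT INTO flat_batch
    SELECT c.label, i.condition_name
    FROM code AS c
    JOIN indication AS i ON i.code_id = c.id
""")


def contents(table: str) -> list:
    # The table name comes only from the fixed literals used below.
    query = f"SELECT label, condition_name FROM {table} ORDER BY label, condition_name"
    return conn.execute(query).fetchall()


per_code_rows = contents("flat_per_code")
batch_rows = contents("flat_batch")
print(per_code_rows == batch_rows)
```

Both approaches end with the same rows. The per-code loop can still be useful when each code needs its own processing or when progress should be saved incrementally, while the batch form lets the database plan and execute the whole job in one pass.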

Conclusion

I aim to write future blog posts describing actual, planned, or theoretical DV use cases. The new DV functions described in this post for sharing DV configurations and/or data will hopefully make it easier for me to share examples of the use cases described in the posts. More importantly, the new functions may also facilitate the sharing and, where appropriate, implementation of beneficial use cases by a larger community.

I wouldn’t count on anyone winning the Nobel Prize for sharing their use cases built with the help of DV 😀, but I do have hope that DV will help people create solutions that make a positive impact. Sometimes even the tiniest change in the right direction can bring major benefits. In other words, DV may help people in their work as… “Zero Effectors!”

Epilogue: Important Warnings, Caveats, and More

While I hope Darth Vecdor can provide value in many areas, Darth Vecdor is distributed on an “AS IS” BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. Any use is entirely at your own discretion and risk (and, of course, the risk of those for whom you have responsibility). Read the license and other information on the Darth Vecdor GitHub site, in its code, and on its user interface if it is run. Nothing here should be construed as superseding the license terms. Nothing here should be construed as medical advice. You need the appropriate expertise and associated due diligence to safely, effectively, and appropriately use Darth Vecdor. There is no assurance that Darth Vecdor or any of its outputs meets or will meet any or all needs for any use. I have discussed its potential use in the medical domain, but that does not imply it is safe or appropriate for any use in healthcare or any use at all. Darth Vecdor is highly configurable, so suitability or unsuitability for any use also may relate to your configuration and use of the system. I tried hard to build it with quality, but you should assume it has serious bugs and design flaws, and the system surely lacks critical functionality. Depending on whether and how it, and/or its outputs, are used, Darth Vecdor and/or its outputs could lead to dangerous outcomes. It’s entirely up to you to assess and validate its suitability (or the suitability of its outputs) for any purpose whatsoever. If there are disclaimers that I should have put here but didn’t, please imagine that any and all such disclaimers are here.

All opinions expressed here are entirely those of the author(s) and do not necessarily represent the opinions or positions of their employers (if any), affiliates (if any), or anyone else. The author(s) reserve the right to change his/her/their minds at any time.
